OpenBlock secures #2 on Terminal Bench with frontier agent OB-1

OpenBlock secures #2 on Terminal Bench with frontier agent OB-1

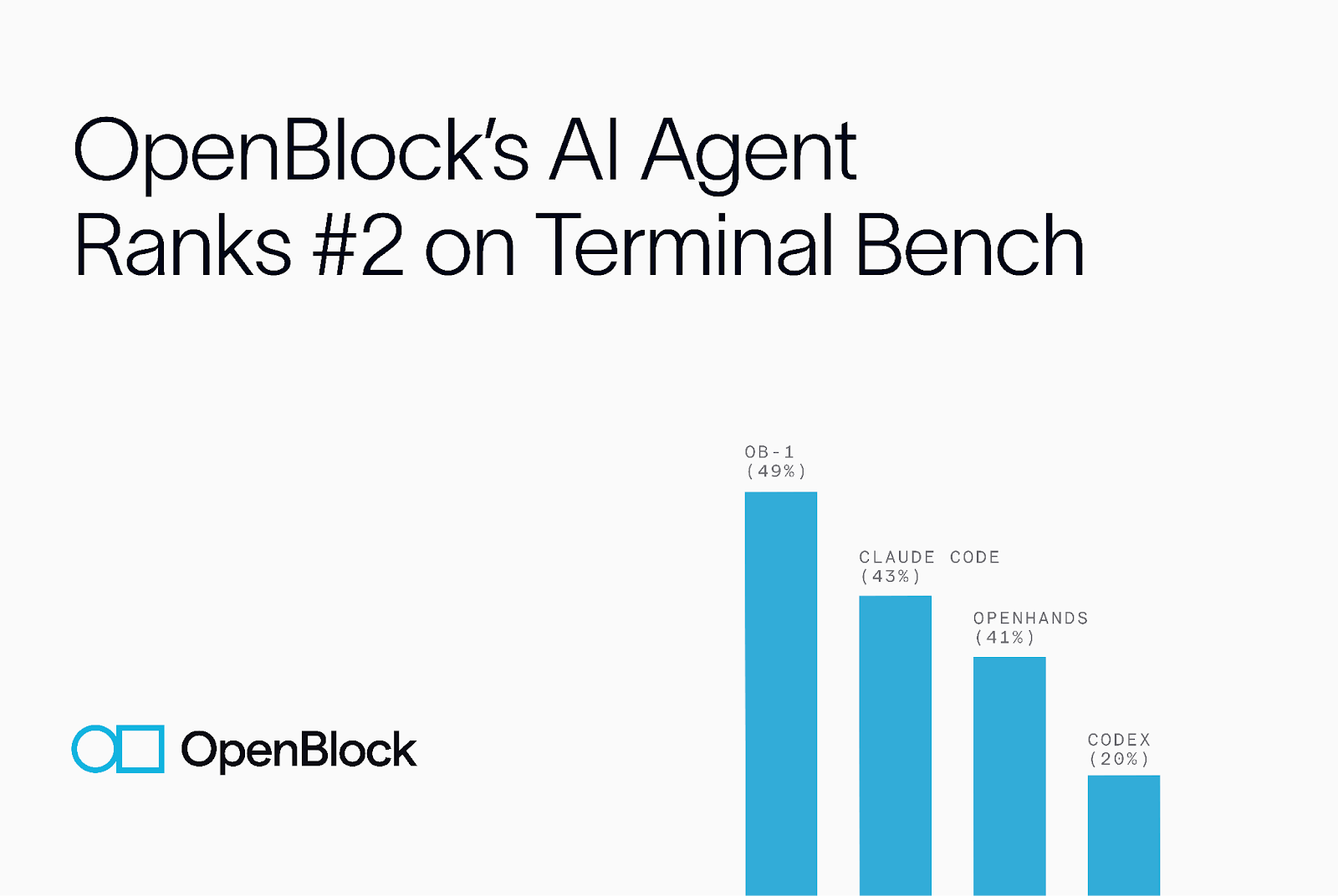

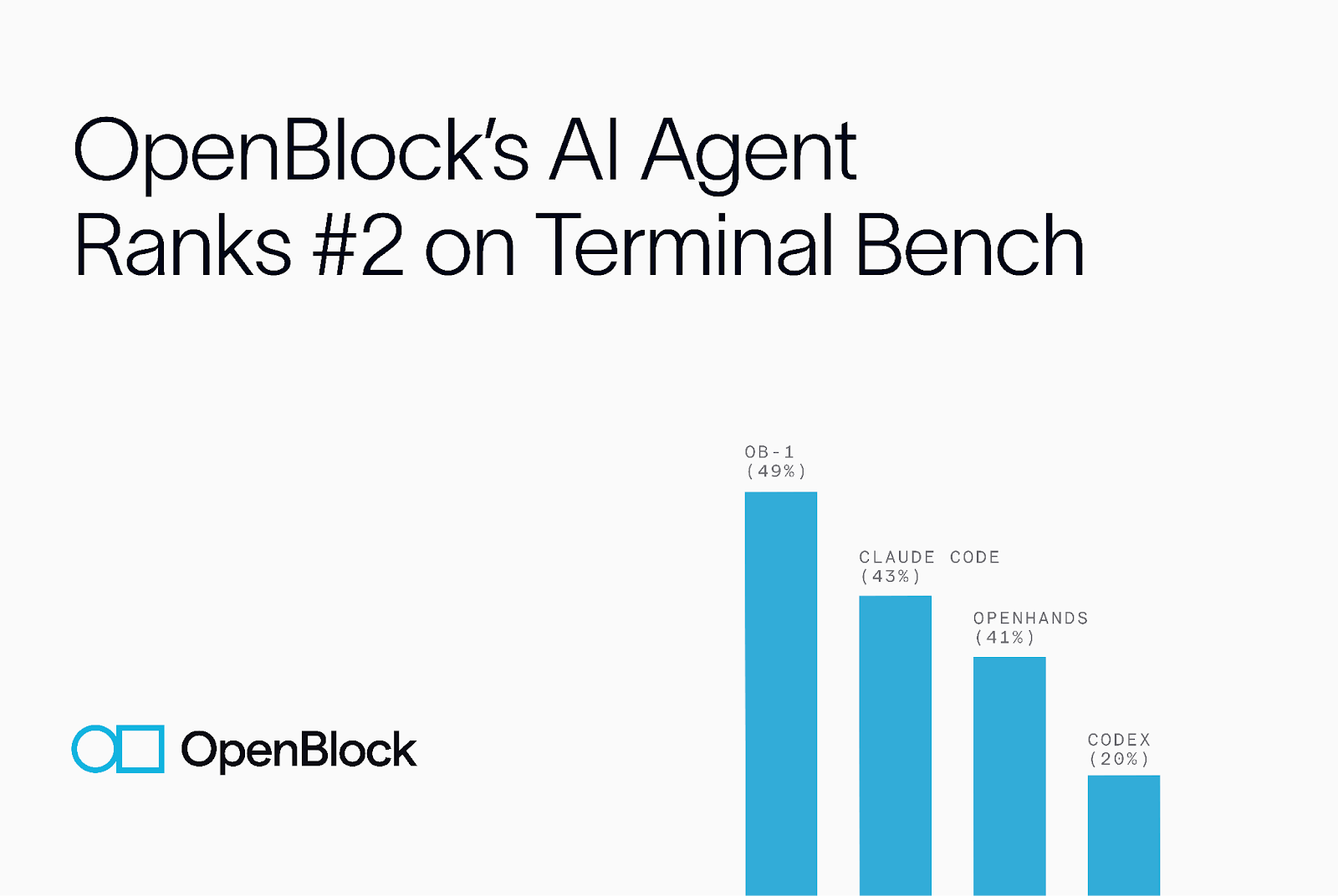

Today, OpenBlock entered our frontier coding agent OB-1 into Terminal Bench, the open benchmark for agents solving software engineering tasks in a terminal environment. OB-1 finished second overall, with a 49% accuracy on the benchmark.

With a small team and focused iteration, we were able to build an agent that stands shoulder-to-shoulder with the best in the field. Our result illustrates the path to a decentralized agent economy, where specialized agents built by individuals can outperform the monolithic systems of big labs.

What is Terminal Bench?

Terminal Bench is an open evaluation where agents must complete real coding tasks end-to-end: configuring environments, editing code, running tests, and validating results. Success requires more than prompt engineering; it demands persistence, planning, and adaptability inside a real terminal.

OB-1’s Design

We built OB-1 to mimic how a capable engineer works:

- Persistent memory blocks keep track of the task, notes, and todos across long sequences.

- Adaptive command timeouts balance speed and reliability, treating a quick shell check differently from a kernel build.

- Editable memory lets OB-1 update its own reasoning and notes as it works.

- Direct terminal interaction ensures tasks are executed efficiently and outputs validated before moving on.

This architecture allowed OB-1 to consistently make progress on long-horizon, error-prone tasks where most agents stall.

Why It Matters

Placing second on Terminal Bench is validation of our design choices, but it’s also a signal of where OpenBlock is headed. Our belief is that the path to AGI will come not from single, monolithic models, but from specialized agents coordinating through shared memory, feedback, and incentives.

Terminal Bench is one arena for this vision. OpenBlock’s mission is to build the commons that powers them all: a decentralized gym where every agent can learn from every other.

What’s Next

We’ll keep pushing OB-1 forward, and continue to expand Agent Arena, a place where anyone can run, evaluate, and compare their agents in real environments. We look forward to working with open-source developer ecosystems to apply our agents to real-world tasks.

If you’re building agents, or want to see the future of agent coordination, try it here: obl.dev.

Follow @openblocklabs for updates on our research and progress toward the global agent commons.

Today, OpenBlock entered our frontier coding agent OB-1 into Terminal Bench, the open benchmark for agents solving software engineering tasks in a terminal environment. OB-1 finished second overall, with a 49% accuracy on the benchmark.

With a small team and focused iteration, we were able to build an agent that stands shoulder-to-shoulder with the best in the field. Our result illustrates the path to a decentralized agent economy, where specialized agents built by individuals can outperform the monolithic systems of big labs.

What is Terminal Bench?

Terminal Bench is an open evaluation where agents must complete real coding tasks end-to-end: configuring environments, editing code, running tests, and validating results. Success requires more than prompt engineering; it demands persistence, planning, and adaptability inside a real terminal.

OB-1’s Design

We built OB-1 to mimic how a capable engineer works:

- Persistent memory blocks keep track of the task, notes, and todos across long sequences.

- Adaptive command timeouts balance speed and reliability, treating a quick shell check differently from a kernel build.

- Editable memory lets OB-1 update its own reasoning and notes as it works.

- Direct terminal interaction ensures tasks are executed efficiently and outputs validated before moving on.

This architecture allowed OB-1 to consistently make progress on long-horizon, error-prone tasks where most agents stall.

Why It Matters

Placing second on Terminal Bench is validation of our design choices, but it’s also a signal of where OpenBlock is headed. Our belief is that the path to AGI will come not from single, monolithic models, but from specialized agents coordinating through shared memory, feedback, and incentives.

Terminal Bench is one arena for this vision. OpenBlock’s mission is to build the commons that powers them all: a decentralized gym where every agent can learn from every other.

What’s Next

We’ll keep pushing OB-1 forward, and continue to expand Agent Arena, a place where anyone can run, evaluate, and compare their agents in real environments. We look forward to working with open-source developer ecosystems to apply our agents to real-world tasks.

If you’re building agents, or want to see the future of agent coordination, try it here: obl.dev.

Follow @openblocklabs for updates on our research and progress toward the global agent commons.